-

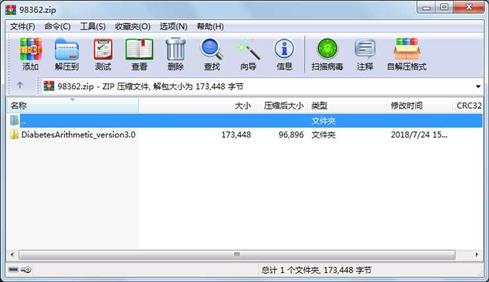

Size: | File type: .zip | Cost: 2 coins | Downloads: 0 | Published: 2024-01-23

- Language: Other

- Tags: random forest, machine learning, logistic regression

Resource description

Code snippet and file information

```python
# coding: utf-8

# In[1]:

# -*- coding: utf-8 -*-
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

# Notebook magic: only works when run inside IPython/Jupyter
get_ipython().run_line_magic('matplotlib', 'inline')

diabetes = pd.read_csv(r'C:\Users\Administrator\Desktop\diabetes\Machine-Learning-with-Python-master\diabetes.csv')
print(diabetes.columns)
```

```python
# In[2]:

diabetes.head()
```

```python
# In[3]:

# "Outcome" is the target we will predict: 0 means no diabetes, 1 means diabetes.
# Of the 768 data points, 500 are labeled 0 and 268 are labeled 1.
print(diabetes.groupby('Outcome').size())
```
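Because the classes are imbalanced, a trivial model that always predicts 0 would already be right about 65% of the time, which is a useful yardstick for the accuracies reported later in the notebook. The `stratify=diabetes['Outcome']` argument passed to `train_test_split` keeps this ratio in both the training and test splits. The arithmetic below uses only the counts stated in the comment above:

```python
# Class counts stated above (Outcome 0 = no diabetes, 1 = diabetes)
counts = {0: 500, 1: 268}
total = sum(counts.values())

# Share of each class in the full dataset
proportions = {label: n / total for label, n in counts.items()}

# Accuracy of a trivial model that always predicts the majority class
baseline_accuracy = max(counts.values()) / total

print(total, {k: round(v, 3) for k, v in proportions.items()}, round(baseline_accuracy, 3))
```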

```python
# In[4]:

# Show the dimensions of the data
print("dimension of diabetes data:{}".format(diabetes.shape))
```

```python
# In[5]:

import seaborn as sns

# Bar chart of the number of samples in each Outcome class
sns.countplot(x='Outcome', data=diabetes)
```

```python
# In[7]:

diabetes.info()
```

```python
# In[70]:

# First, use kNN to see whether we can identify a relationship
# between model complexity and accuracy
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

x_train, x_test, y_train, y_test = train_test_split(
    diabetes.loc[:, diabetes.columns != 'Outcome'],
    diabetes['Outcome'],
    stratify=diabetes['Outcome'],
    random_state=66)

training_accuracy = []
test_accuracy = []
# try n_neighbors from 1 to 10
neighbors_settings = range(1, 11)

for n_neighbors in neighbors_settings:
    # build the model
    knn = KNeighborsClassifier(n_neighbors=n_neighbors)
    knn.fit(x_train, y_train)
    # record training set accuracy
    training_accuracy.append(knn.score(x_train, y_train))
    # record test set accuracy
    test_accuracy.append(knn.score(x_test, y_test))

plt.plot(neighbors_settings, training_accuracy, label="training accuracy")
plt.plot(neighbors_settings, test_accuracy, label="test accuracy")
plt.ylabel("Accuracy")
plt.xlabel("n_neighbors")
plt.legend()
plt.savefig('knn_compare_model')
```
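In kNN, a small `n_neighbors` means a more complex decision boundary. With `n_neighbors=1` every training point (assuming no duplicates) is its own nearest neighbour, so training accuracy is perfect, while isolated noisy labels leak straight into test predictions. The pure-Python sketch below illustrates this on a made-up 1-D toy dataset, not the diabetes data; `knn_predict` and `knn_accuracy` are hypothetical helpers, not scikit-learn's `KNeighborsClassifier`, whose tie-breaking may differ.

```python
from collections import Counter


def knn_predict(train_X, train_y, x, k):
    # Indices of training points sorted by squared Euclidean distance to x
    # (sorted() is stable, so equal distances keep their original order)
    order = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - c) ** 2 for a, c in zip(train_X[i], x)),
    )
    # Majority vote over the k nearest labels; Counter.most_common breaks
    # ties in favour of the label encountered first
    votes = Counter(train_y[i] for i in order[:k])
    return votes.most_common(1)[0][0]


def knn_accuracy(train_X, train_y, X, y, k):
    # Fraction of points in X whose predicted label matches y
    preds = [knn_predict(train_X, train_y, x, k) for x in X]
    return sum(p == t for p, t in zip(preds, y)) / len(y)


# Made-up 1-D toy data: class 0 on the left, class 1 on the right,
# with two "noisy" labels at x=3 and x=4
train_X = [(0.0,), (1.0,), (2.0,), (3.0,), (4.0,), (5.0,), (6.0,), (7.0,)]
train_y = [0, 0, 0, 1, 0, 1, 1, 1]
test_X = [(0.5,), (2.9,), (4.1,), (6.5,)]
test_y = [0, 0, 1, 1]

for k in (1, 3):
    print(k,
          knn_accuracy(train_X, train_y, train_X, train_y, k),
          knn_accuracy(train_X, train_y, test_X, test_y, k))
```

On these toy points, `n_neighbors=1` scores 1.0 on the training set but only 0.5 on the test points next to the noisy labels, while `n_neighbors=3` gives up some training accuracy (0.75) and scores 1.0 on the test points.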

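```python
# In[73]:

# Logistic regression
# With the default regularization parameter C=1, the model reaches 78% accuracy
# on the training set and 77% on the test set.
from sklearn.linear_model import LogisticRegression

# Newer scikit-learn versions may emit a ConvergenceWarning on these unscaled
# features; passing a larger max_iter (e.g. max_iter=1000) usually avoids it.
logreg = LogisticRegression().fit(x_train, y_train)
print("Training set score:{:.3f}".format(logreg.score(x_train, y_train)))  # three decimal places
print("Test set score:{:.3f}".format(logreg.score(x_test, y_test)))
```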
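For a scikit-learn classifier, `score` returns mean accuracy: the fraction of samples whose predicted label equals the true label, which `{:.3f}` then formats to three decimal places. For binary logistic regression the predicted label is 1 when the sigmoid of the linear score `w·x + b` exceeds 0.5, which is the same as `w·x + b > 0`. The pure-Python sketch below mirrors that computation; the weights `w`, intercept `b` and the four samples are made-up values for illustration, not anything learned from the diabetes data.

```python
import math


def predict_proba(w, b, x):
    # Sigmoid of the linear score w·x + b gives P(class 1 | x)
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    # Class 1 when P(class 1 | x) > 0.5, i.e. when w·x + b > 0
    return 1 if predict_proba(w, b, x) > 0.5 else 0


def accuracy(w, b, X, y):
    # Mean accuracy: fraction of samples whose predicted label equals the true label
    return sum(predict(w, b, x) == label for x, label in zip(X, y)) / len(y)


# Hypothetical weights and samples, for illustration only
w, b = [1.5, -0.5], -1.0
X = [(2.0, 1.0), (0.0, 0.0), (1.0, 2.0), (3.0, 0.0)]
y = [1, 0, 1, 1]

score = accuracy(w, b, X, y)
print("Test set score:{:.3f}".format(score))  # prints "Test set score:0.750"
```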

```python
# In[74]:

# Setting C=100 slightly raises training-set accuracy but slightly lowers
# test-set accuracy, which shows that weaker regularization (a more complex
# model) does not necessarily predict better than the default parameters.
# We therefore keep the default C=1.
logreg100 = LogisticRegression(C=100).fit(x_train, y_train)
print("Training set accuracy:{:.3f}".format(logreg100.score(x_train, y_train)))
print("Test set accuracy:{:.3f}".format(logreg100.score(x_test, y_test)))
```
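The role of `C` follows from the L2-penalized objective that the scikit-learn documentation gives for `LogisticRegression`. Written roughly, with labels recoded as $y_i \in \{-1, +1\}$ and the intercept $b$ left out of the penalty (solvers differ on how they treat the intercept), it is:

$$
\min_{w,\,b}\;\; \frac{1}{2}\lVert w\rVert_2^2 \;+\; C\sum_{i=1}^{n}\log\!\Big(1+\exp\!\big(-y_i\,(x_i^\top w + b)\big)\Big)
$$

Because $C$ multiplies the data-fit term rather than the penalty, $C=100$ lets fitting the training set dominate (weaker regularization, larger coefficients), while $C=0.001$ lets the $\tfrac{1}{2}\lVert w\rVert_2^2$ term dominate and pulls the coefficients toward zero.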

```python
# In[77]:

# Visualize the coefficients learned with three different values of C.
# Stronger regularization (C=0.001) pushes the coefficients closer to zero.
# Looking closely, the coefficient of "DiabetesPedigreeFunction" is positive
# for C=100, C=1 and C=0.001, suggesting that in every model this feature is
# positively associated with a sample being diabetic.
logreg001 = LogisticRegression(C=0.001).fit(x_train, y_train)

# Feature names: every column except Outcome (column index 8)
diabetes_features = [x for i, x in enumerate(diabetes.columns) if i != 8]

plt.figure(figsize=(8, 6))
plt.plot(logreg.coef_.T, 'o', label="C=1")
plt.plot(logreg100.coef_.T, '^', label="C=100")
plt.plot(logreg001.coef_.T, 'v', label="C=0.001")
# One tick per feature (8 features, so not diabetes.shape[1], which is 9)
plt.xticks(range(len(diabetes_features)), diabetes_features, rotation=90)
plt.hlines(0, 0, diabetes.shape[1])
plt.ylim(-5, 5)
plt.xlabel("Feature")
plt.ylabel("Coefficient magnitude")
plt.legend()
plt.savefig('log_coef')
```
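The shrinkage effect can be checked on a toy problem with a from-scratch sketch that minimizes an L2-penalized logistic loss, `0.5*||w||^2 + C * sum(log-losses)`, by plain gradient descent. This is not scikit-learn's solver: `fit_l2_logreg`, its step-size rule and the eight made-up samples (feature 0 predictive, feature 1 mostly noise) are assumptions for illustration only.

```python
import math


def fit_l2_logreg(X, y, C, n_iter=3000):
    """Gradient descent on 0.5*||w||^2 + C * sum(log(1 + exp(-s_i * (w.x_i + b)))),
    where s_i = +1 for label 1 and -1 for label 0. The intercept b is not penalized."""
    n_features = len(X[0])
    signs = [1.0 if label == 1 else -1.0 for label in y]
    # Step size 1/L, where L upper-bounds the Lipschitz constant of the gradient
    lipschitz = 1.0 + C * sum(sum(v * v for v in x) + 1.0 for x in X) / 4.0
    lr = 1.0 / lipschitz
    w = [0.0] * n_features
    b = 0.0
    for _ in range(n_iter):
        grad_w = list(w)  # gradient of the penalty 0.5*||w||^2
        grad_b = 0.0
        for x, s in zip(X, signs):
            margin = s * (sum(wj * xj for wj, xj in zip(w, x)) + b)
            # d/dm log(1 + exp(-m)) = -1 / (1 + exp(m)), chained with dm/dw_j = s * x_j
            coeff = -C * s / (1.0 + math.exp(margin))
            for j in range(n_features):
                grad_w[j] += coeff * x[j]
            grad_b += coeff
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Made-up toy data: feature 0 separates the classes (with two overlapping points),
# feature 1 is mostly noise
X = [(-2.0, 0.5), (-1.5, -0.5), (-1.0, 0.3), (-0.5, -0.3),
     (0.5, 0.3), (1.0, -0.3), (1.5, 0.5), (2.0, -0.5)]
y = [0, 0, 0, 1, 0, 1, 1, 1]

results = {C: fit_l2_logreg(X, y, C) for C in (100, 1, 0.001)}
for C, (w, b) in results.items():
    print(f"C={C}: w={[round(v, 3) for v in w]}, b={round(b, 3)}")
```

As C drops from 100 to 0.001 the coefficient vector shrinks toward zero, while the coefficient of the predictive feature stays positive, which mirrors what the plot shows for DiabetesPedigreeFunction.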

```python
# In[71]:

# Decision tree algorithm
from sklearn.tree import …
```

| Attribute | Size (bytes) | Date | Time | Name |
|---|---:|---|---|---|
| File | 137092 | 2018-07-24 | 15:30 | `DiabetesArithmetic_version3.0\DiabetesArithmetic_version3.0.ipynb` |
| File | 12481 | 2018-07-24 | 15:30 | `DiabetesArithmetic_version3.0\DiabetesArithmetic_version3.0.py` |
| File | 23875 | 2018-07-21 | 16:01 | `DiabetesArithmetic_version3.0\diabetes.csv` |
| Directory | 0 | 2018-07-24 | 15:32 | `DiabetesArithmetic_version3.0\` |


Sichuan Public Network Security Filing No. 51152502000135
